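This paper is derived from the presentation ‘Potenziali impatti dell’applicazione dell’Intelligenza Artificiale nel Combat Management System’ (‘Potential impacts of the application of Artificial Intelligence in the Combat Management System’), held at the Tiberio workshop organized by the Italian MoD in Rome, June 2019.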

Introduction

In the frame of combat systems, the Command and Control System (C2) allows the Commander and their team to manage in near real-time: (i) system electronics, (ii) sensors, (iii) electronic warfare and (iv) effectors, in order to generate Situational Awareness (SA) and ensure the tactical control of the area-of-operations.

Artificial Intelligence (AI) is not new, yet recent advances in Deep Learning have spurred great excitement in the field. The research objective is to develop novel approaches based on AI and candidate solutions which can reduce information overload, improve situational awareness and support the decision-making process. In order to achieve this, it is important to identify constraints and goals in the use of AI, identify roles and problems for which AI is better suited, define the process and develop the tools needed for experimentation, define metrics for performance evaluation, and delineate applicable verification and validation procedures.

It is therefore essential to start a process of analysis and experimentation to verify that all relevant issues from an operational, technical and industrial standpoint are properly addressed. Some of these analyses and experiments will necessarily need the support of the end user, because any solution is doomed to fail if it does not match the concepts and expectations of the user. Moreover, these innovative approaches strongly depend on the availability of real data, which is crucial for the appropriate training of AI algorithms.

Problem Definition

There are many reasons to investigate the potential benefits of applying AI to defence systems, but the most relevant is that AI promises to improve the speed and accuracy of just about everything, from logistics to battlefield planning. Speed in this case is not about the velocity of an airplane or a munition: it is about decision-making, making the right decisions first and shortening the C2 cycle [1].

Future scenarios will probably exceed current ones in terms of speed, number and density of threats, including hypersonic and cruise missiles, unmanned platforms, latest-generation stealth aircraft, and swarms of drones of multiple forms and sizes operating in teams. In such complex, saturating scenarios, where everything from detection to coordination and synchronization is more difficult, human cognitive capacity is overwhelmed and human response time is not fast enough. From a military perspective, AI can represent a significant force multiplier.

Brief Remarks on AI

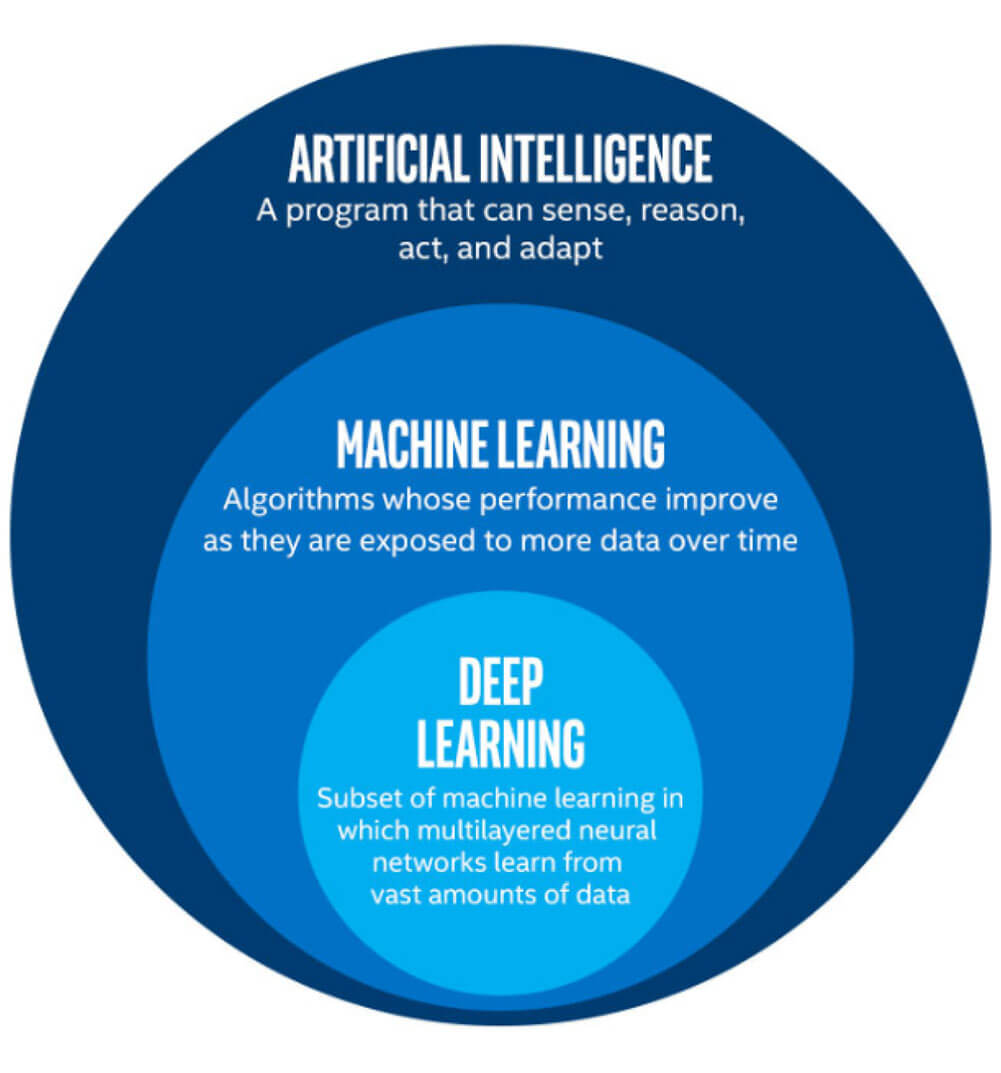

AI is a broad umbrella which encompasses Machine Learning (ML) and Deep Learning (DL) [2]. Figure 1 presents a simplified view of the relationship among AI, ML and DL.

ML extracts knowledge from training data and applies this knowledge to new data: ML behaviour therefore depends on the quality of the training data and on how the new data relates to the training data.
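The dependence on how new data relates to the training data can be illustrated with a minimal sketch. The one-feature nearest-centroid classifier, the `slow` / `fast` labels and all speed values below are hypothetical, chosen only for illustration; they are not taken from the system discussed in this paper.

```python
from statistics import mean


def train_centroids(samples):
    """Learn one centroid (mean feature value) per class label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the class whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def accuracy(centroids, samples):
    """Fraction of samples whose predicted label matches the true one."""
    hits = sum(predict(centroids, value) == label for value, label in samples)
    return hits / len(samples)


# Hypothetical training set: (speed in m/s, class label).
training = [(90, "slow"), (100, "slow"), (110, "slow"),
            (290, "fast"), (300, "fast"), (310, "fast")]
centroids = train_centroids(training)

# New data resembling the training data.
similar = [(95, "slow"), (105, "slow"), (295, "fast"), (305, "fast")]
# New data where the "slow" class has drifted towards higher speeds.
shifted = [(210, "slow"), (230, "slow"), (295, "fast"), (305, "fast")]

print(accuracy(centroids, similar))
print(accuracy(centroids, shifted))
```

In this toy case the classifier itself is unchanged between the two evaluations; only the relation between the new data and the training data differs, and accuracy on the drifted set is lower.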

DL is the subset of ML based on multilayered neural networks, and it has triggered the latest revival in AI. DL is the best realization so far of a computational model which can address visual pattern recognition and natural language processing via hierarchical spatio-temporal machines inspired by biological models.

DL has surpassed human performance in specific tasks, yet ‘Machine learning-based systems can fail not only under “unanticipated situations” or “when it encounters data radically different from its training set” but also under normal situations, even on data that is extremely similar to its training set’ [3].

Flaws and fragility in ML algorithms keep emerging, e.g. failures to classify an image when one or a few pixels are modified [4], failures to detect large obstacles in autonomous driving, or vulnerability to deception [5].

ML behaviour is therefore not fully predictable, and this needs to be considered in military applications.
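The fragility to small input changes mentioned above can be sketched on the simplest possible model. The example below applies a sign-of-gradient perturbation (in the spirit of the fast gradient sign method) to a linear classifier; the weights, bias, input features and `epsilon` are hypothetical values chosen for illustration and do not represent any fielded classifier.

```python
def score(weights, bias, features):
    """Linear decision score: positive means class A, otherwise class B."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(value):
    """Return -1, 0 or 1 according to the sign of the value."""
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Shift each feature by at most epsilon against the score gradient.

    For a linear model the gradient of the score with respect to the
    features is the weight vector itself.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical model parameters and input.
weights = [1.0, -2.0, 0.5, 1.5]
bias = 0.0
features = [0.2, 0.1, 0.4, 0.1]

original_score = score(weights, bias, features)
adversarial = perturb(weights, features, epsilon=0.1)
adversarial_score = score(weights, bias, adversarial)

# Compare the decision before and after the perturbation.
print(original_score > 0, adversarial_score > 0)
```

No feature moves by more than `epsilon`, yet the sign of the score, and hence the decision, changes. Deep networks are not linear, but the same idea underlies many of the adversarial examples reported in the literature.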

Problem Analysis

The adoption of a new technology, from an industrial point of view, is generally a burdensome activity. It is necessary to evaluate the impacts deriving from the inclusion of the new technology and carry out a cost-benefit analysis on all relevant aspects:

- Operational (e.g. change of paradigm, ease of use, training, etc.);

- Technical (e.g. complexity, performance, computational load, safety, etc.);

- Support (e.g. associated logistics, industrial maintenance, etc.).

Furthermore, from the end user's point of view, a new technology should:

- Ensure better performance;

- Be predictable / understandable;

- Fail in a controllable manner;

- Avoid behaviour which is unexpected / illogical / incomprehensible / irrecoverable.

Another issue to consider is that ML is only as good as the data it learns from: the data should be representative of the problem to be solved. For AI algorithms to work properly, a large set of training data, together with the related correct ‘responses’, has to be prepared and processed. This is a substantial activity that requires experience in the design of scenarios and the use of specialized software tools and computing hardware for building and training the AI architecture.

A solution based on AI may be more or less comprehensible to the end user. If DL techniques are used, for example, the solution is a kind of black box and user understanding is low: the internal processing is no longer available for inspection, analysis, modification or correction without the risk of altering the behaviour of the algorithm. The need therefore arises for new testing techniques, especially for formal acceptance tests.

Verification and Validation (V&V) is therefore a critical related issue for AI-based algorithms. With respect to conventional software, the following issues must be considered when applying V&V to an AI-based approach:

- Greater complexity;

- Lack of ‘transparency’;

- Totally different implementation techniques;

- Code Inspection not completely effective;

- Need for adaptive behaviour introduces an additional level of complexity;

- Difficulty to rule out unwanted behaviour;

- Difficulty in applying existing regulations and procedures.

To overcome the V&V issues reported above, a number of applicable strategies can be adopted such as:

- Expand testing cases and procedures;

- Adversarial Testing;

- Verification and validation of training data after the event;

- Preparation of behavioural maps;

- Improve Human Machine Interface (‘explainability of AI’);

- Constrained use and integration of the new technology.

In a nutshell, an AI approach has to consider:

- Type of application and to what extent it can be entrusted to AI;

- Amount, quality and completeness of training data which is required;

- How to approach V&V;

- Level of human-machine teaming.

AI for Command and Control Systems

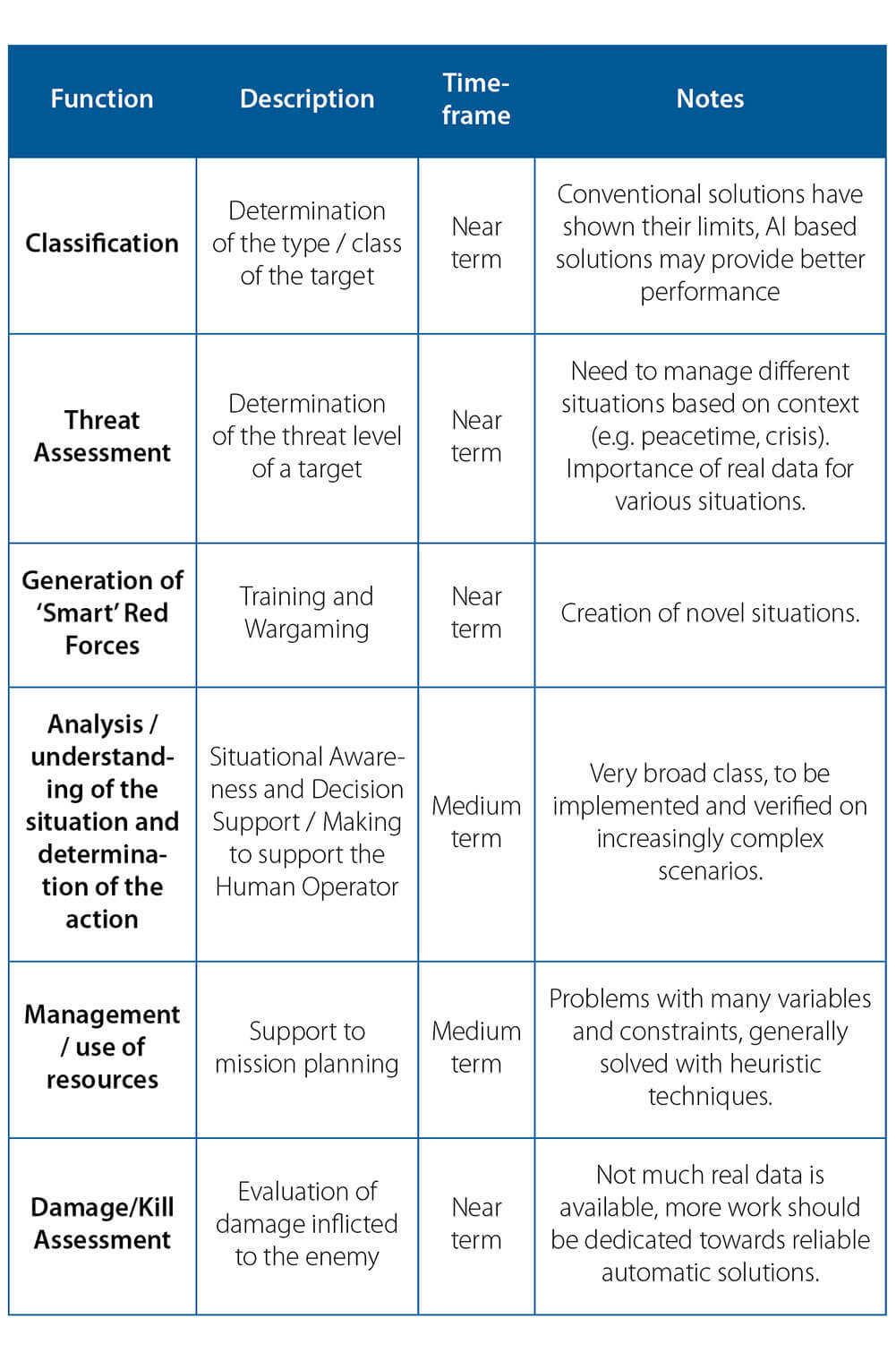

The study of the potential impact of AI on the C2 product identified, as one of its first steps, the areas that appear suitable for the introduction of AI. Figure 2 presents a list of C2 functions that could potentially be upgraded by AI technologies, with an indication of the timeframe.

Track classification is currently one of the most promising areas of application since it shares one essential aspect of big data: a large number of tracks flow through the system, they must be assessed in near real-time, and their filtering is of paramount importance in order not to saturate the Operator and system resources. This topic is expanded in the next section.

Another area of potential interest is the generation of smart red forces for penetration testing of defences. An AI-powered red force can provide advantages such as adaptive threats, which can better train the skills of the trainee and uncover gaps in the defence system.

Another potentially interesting application is the Kill Assessment (KA) of a threat engaged by on-board effectors. Today KA is often entrusted to the Operator and is a time-consuming process; if the assessment is not rapid enough, this either limits the possibility of re-engagement in the case of fast threats or forces the waste of precious ammunition. Saving ammunition through an AI process is just one of the tangible benefits, in addition to increasing a high-value target’s probability of survival through identification and closure of vulnerabilities.

ML for Classification

The determination of the type / class of the target is an important and critical issue in homeland and military defence since it is one of the main drivers in deciding the type of reaction. State-of-the-art fielded solutions generally encode human knowledge in the form of heuristics, and they can be used as a reference to measure the potential upgrade due to AI in terms of:

- Improvement of accuracy in response;

- Minimizing the number of false alarms;

- Minimizing response time.

Relevant factors in a classification problem are the separability of the classes and sensor limitations. Three different cases may arise:

- Classes are not separable in the observed dimensions;

- Classes are separable in principle but noise and limited resolution of the sensors may obfuscate such separation;

- Classes are separable even if noise and resolution of the sensors are taken into account.

Classification performance must of course be assessed differently in each case.
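The three cases can be made concrete with a small numerical sketch. It assumes, purely for illustration, a single observed feature, two equally likely classes, Gaussian sensor noise with a common standard deviation `sigma`, and a decision threshold at the midpoint between the class means; none of these assumptions or values come from the system discussed here.

```python
from statistics import NormalDist


def midpoint_error(mean_a, mean_b, sigma):
    """Error rate of a midpoint threshold between two equally likely classes
    whose measurements are Gaussian with common standard deviation sigma."""
    distance = abs(mean_a - mean_b)
    return NormalDist().cdf(-distance / (2 * sigma))


# Case 1: the classes coincide in the observed dimension.
not_separable = midpoint_error(5.0, 5.0, sigma=1.0)
# Case 2: distinct class means, but sensor noise comparable to the gap.
obscured = midpoint_error(4.0, 6.0, sigma=2.0)
# Case 3: the same gap observed with a much less noisy sensor.
separable = midpoint_error(4.0, 6.0, sigma=0.2)

for name, err in [("not separable", not_separable),
                  ("obscured by noise", obscured),
                  ("separable", separable)]:
    print(f"{name}: error rate {err:.2e}")
```

Under these assumptions the first case cannot do better than chance whatever classifier is used, the second is limited by the sensor rather than by the algorithm, and only in the third can a very low error rate reasonably be demanded.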

Current results confirm that ML techniques are powerful at extracting knowledge from data and can improve classification performance and reduce response time with respect to conventional solutions. The ML classifier provides excellent performance, with very high precision and recall; yet, depending on the case, this performance may still not be enough for stringent military requirements.
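For reference, precision and recall follow directly from the counts of correct and incorrect decisions. The sketch below uses hypothetical counts for a single ‘threat’ class, not measured results, to show why even high values can leave a residual that matters operationally.

```python
def precision(true_positives, false_positives):
    """Fraction of tracks declared as threats that really are threats."""
    return true_positives / (true_positives + false_positives)


def recall(true_positives, false_negatives):
    """Fraction of actual threats that the classifier declares as threats."""
    return true_positives / (true_positives + false_negatives)


# Hypothetical counts over 1000 actual threat tracks.
tp, fp, fn = 990, 10, 10

p = precision(tp, fp)
r = recall(tp, fn)
print(f"precision={p:.3f} recall={r:.3f} missed threats={fn} false alarms={fp}")
```

With these illustrative numbers both metrics are 0.99, yet ten threats are missed and ten false alarms are raised; whether that is acceptable depends on the requirement for the specific case.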

The ML classifier does not replace the legacy classifier but operates in parallel with it. The legacy classifier enforces deterministic and probabilistic rules which codify human-derived knowledge; the ML classifier brings in knowledge extracted from the training data. An intrinsic or extrinsic confidence is associated with the class determined by each classifier. The experiments on classification modules that use both hard-coded algorithms and ML methods combine the classifiers (ML, legacy and external sources) in order to exploit the strengths of each and take into account their respective areas of consistent operation. Current results once again confirm that the combination of classifiers achieves superior performance with respect to the individual classifiers.
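The combination rule is not detailed here. One simple possibility, shown only as an assumed sketch, is a confidence-weighted vote in which each source contributes its confidence to the class it proposes. The function `fuse`, the source names, labels and confidence values are all hypothetical.

```python
from collections import defaultdict


def fuse(outputs):
    """Combine (source, label, confidence) triples by summed confidence.

    Returns the winning label and its share of the total confidence.
    """
    totals = defaultdict(float)
    for _source, label, confidence in outputs:
        totals[label] += confidence
    overall = sum(totals.values())
    if overall == 0:
        raise ValueError("no classifier reported a non-zero confidence")
    best = max(totals, key=totals.get)
    return best, totals[best] / overall


# Hypothetical outputs of the three sources for a single track.
outputs = [
    ("ml", "drone", 0.9),
    ("legacy", "bird", 0.6),
    ("external", "drone", 0.5),
]
label, share = fuse(outputs)
print(label, round(share, 2))
```

A practical scheme would also need to weight each source according to the region in which it operates consistently, which this sketch does not attempt.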

Depending on the classification problem at hand, the classifier may provide a fully automatic solution or provide a data filtering and reduction capability which eases the Operator workload. Several parameters are available for fine-tuning the overall classifier performance.
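One way to picture the trade-off between full automation and Operator support is a confidence threshold, sketched below. The `triage` function, the track identifiers, labels, confidences and the threshold value are hypothetical, and the threshold stands in for just one of the tuning parameters mentioned above.

```python
def triage(tracks, threshold):
    """Split tracks into automatically classified ones and ones
    referred to the Operator, based on classifier confidence."""
    automatic, referred = [], []
    for track_id, label, confidence in tracks:
        if confidence >= threshold:
            automatic.append((track_id, label))
        else:
            referred.append((track_id, label, confidence))
    return automatic, referred


# Hypothetical classifier outputs: (track id, label, confidence).
tracks = [
    (101, "airliner", 0.98),
    (102, "drone", 0.62),
    (103, "helicopter", 0.91),
    (104, "unknown", 0.40),
]

automatic, referred = triage(tracks, threshold=0.9)
print(len(automatic), "handled automatically;",
      len(referred), "referred to the Operator")
```

Raising `threshold` sends more tracks to the Operator, while lowering it increases the degree of automation.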

As a final remark, it is not foreseen to remove the Human Operator from the decision loop in mission-critical applications.

Conclusions

AI has been around for decades and the field continues to progress, even though there have been dark periods of reduced funding and skepticism. Recent advances in machine learning, notably DL, are now showing impressive results in consumer applications and have spurred a lot of enthusiasm and activity in the field. On the back of these advances, the relevance of AI is being re-examined and experimented with in military C2.

The application of ML in the military is not straightforward, due to the critical nature of military operations and to the technology's susceptibility to noise and vulnerability to adversarial attacks. The ability to understand and explain the decision-making process in mission-critical applications is of paramount importance; ML solutions should be properly studied, trained, constructed and managed so that they can earn the trust of designers and end users. The specificities of AI require that the integration of these technologies takes place only after all the necessary verifications have been made and successfully passed.

DL techniques are basically still recognizing patterns in data: there is no understanding and no intelligence in a true human sense.

True AI is still ahead, yet AI is here to stay. The approach to AI and the expected impact on C2 today are mostly evolutionary. In the long run they may gradually become more and more revolutionary, and change the way things are done, information is approached, and operations are performed and also the way in which systems are designed. For these reasons it is important to continue to monitor, investigate, and experiment with advances in the field of AI and learn how to most effectively deploy AI.