Introduction

Decision-making problems, one of the main research areas of management science, have been studied in a highly interdisciplinary manner, with contributions from scientists and engineers in various research fields. Advances in Artificial Intelligence (AI) and the use of AI systems in decision-making have led to a new era in which human decision authority is increasingly being delegated to machines. More than two decades ago, game and interaction-science researchers, as well as chess fans, were thrilled by the defeat of the chess grandmaster Garry Kasparov by Deep Blue1. Since that striking news, not only the computing power of machines but also decision models and programming algorithms have improved drastically. At the time of the match, Deep Blue could evaluate 200 million possible moves per second and predict 20 steps ahead in every single move2. Modern intelligent machine capabilities reach far beyond that and have become a prevalent topic of popular science discussion among professionals, including the military. However, the conceptual framework of AI and its subfields is not commonly well understood.

NATO’s innovation team3 notes the importance of building a resilient innovation pipeline and points out the first-adopter advantage for emerging disruptive technology. The development of this technology could not be more relevant to the world of geopolitics and deterrence today. Two core areas of concern are emphasized4: addressing the fragmentation of researchers, academia, start-ups and governments at the beginning of this pipeline (managing uncertainty), and being able to adopt and scale these new technologies as and when they are ready. A recent article by the innovation unit, citing a RAND report5, distinguishes between types of applications (Enterprise AI, Mission Support AI, and Operational AI) and discusses new and well-designed principles and practices relating to good governance and responsible use6. It is therefore vital for military professionals to acquire a solid understanding of AI systems and the accompanying new breed of decision-making concepts as part of the first-adopter endeavour.

Learning and Decision-Making Machines

During the last half-century, intelligent systems have become unavoidable elements of human life. There is an ongoing and accelerating trend of transferring tough decisions to intelligent systems. Humans benefit from the comfort this transfer brings, especially where prompt judgments must be made in complex and stressful environments. Machines have proven to be more efficient and consistent under such conditions. Financial markets were one of the early and proven examples of algorithms taking over decision authority from human actors, with an ever-increasing volume of high-frequency algorithmic transactions. In a Bloomberg article, NASDAQ, one of the most efficient stock markets with more than 20 million transactions taking place every day7, was referred to as ‘belonging to the bots’8. The corresponding scientific literature spans a century of research on organizational structures, data processing tools and techniques, the benefits of technology to decision-making, and the potential harms of using decision-supporting machines.9 Although this phenomenon has been investigated for a relatively long time, breathtaking innovations in big data analysis and Machine Learning (ML) have led commercial and public sector researchers to focus their research and development efforts on the field in the last few years. Advanced algorithms, large data sets, and systems with the processing power to run efficient calculations and draw inferences from these data sets have enabled the execution of complex tasks that were previously believed to always require human intelligence.

Turkish mathematician Cahit Arf (Professor Ordinarius), speaking in Erzurum in 1959 at a public conference intended to bring university studies to the wider public, posed the following question:

‘(…) we would then say our brain can solve problems it has never come up with before, or at least we think it did not. However, machines don’t have this. I believe the brain’s characteristic attribute is its capability of adapting to new, or what we believe is new conditions. Therefore, we need to be willing to understand this: can a machine with adaption capability be built; in other words, a machine that may solve problems that were not explicitly considered while it was being designed, and how? (…)’ 10

More than six decades after Arf’s speech, the same question stands. The frontrunners of popular science discussions, AI, ML, and Deep Learning (DL), have remained vague concepts without strict boundaries between them. Although the notion of AI was first introduced in academia during the late 1950s, the study of the perception ability of machines is far older. It is widely accepted that computers can carry out logical operations. However, the question of whether machines can think has always been controversial.11 The widespread definition of AI has been the ‘ability of a computer or machine to mimic the capabilities of the human mind.’12 ML, a branch of AI, focuses on building applications that learn from data and improve their accuracy over time without being programmed to do so.13 DL is a relatively recent concept and a subset of ML; as described by IBM, its multi-layered neural networks are modelled to function like the human brain and learn from large amounts of data. The main distinctive feature of DL, with respect to ML, is the ability to learn and reason without the need for structured and/or tagged data. Dictation applications, for instance, were trained on words and phrases about a decade ago, while current widespread applications such as Apple Siri, Amazon Alexa and Google Assistant may recognize voice commands without a pre-training requirement.14 Briefly, without strictly set boundaries, DL may be defined as a subset of ML, which is in turn a subset of AI. As may be inferred from these definitions, ever-evolving systems, algorithms and emerging capabilities let machines train without being explicitly programmed or relying on previously determined conditions, and act autonomously using these self-learning abilities. The high processing power and large data sets of the current era are what make the self-learning of machines possible.
Machines, in this process, may draw inferences from what they learn or serve as a decision support system by providing the results to the operator. When the necessary conditions are met, they may act autonomously, without external operator interference. Following the decisions made by the machine, typical feedback algorithms may be used to optimize those same algorithms and systems. Data produced by humans, specifically personal data, is increasingly exposed to learning algorithms that use big data analysis and ML/DL systems (e.g., search engines, targeted advertisements, and movie recommendations). Successful, high-achieving graduates of relevant university programmes are hired by technology firms with titles beginning with ‘data-’ and paid higher salaries than most government and military specialists. This in turn affects the recruitment of AI and ML specialists for defence projects.

The Advent of Deep Learning

DL is a relatively new concept that entered the ML realm strikingly in the last decade. The reason for this late introduction is that state-of-the-art neural network algorithms must be fed with enough data to actually learn from. The high digitization of society, which generates large amounts of data, has let researchers use computer hardware with high computational power to exploit big data, which in due time drew the attention of governments and military institutions. During this fast process of DL development, recent algorithmic leaps have opened up new ways of processing large batches of data. As the architects of these advancing state-of-the-art systems, humans are at a decisive point: whether or not to delegate authority to machines to take action without interference in critical situations. This becomes even more crucial in fields such as healthcare and defence. Many applications of widely researched DL areas such as natural language processing, semantic analysis, pattern recognition, and demographic analysis are used for military intelligence. Nonetheless, it is relatively harder to predict the employment of learning algorithms in autonomous decision-making systems in the military.

An early example of autonomous machines operating with a very narrow error margin has been self-driving cars. In March 2018, the New York Times reported a fatal accident caused by a self-driving ‘Uber’ in Arizona, which was recorded as the first pedestrian death associated with self-driving technology.15 This worrisome incident gave reason to examine gaps and search for solutions regarding the reliability of intelligent machines and in the fields of ethics and justice. The question of how to account for damages caused by autonomous systems still has no clear answer. Similarly, predictive policing is an emerging notion from the law enforcement side of the machine learning realm, where quantitative techniques16 or ML models are used to identify ‘likely’ targets for intervention and prevent crime, or solve past crimes.17 The use of machines to predict crime areas and potential criminals, reminiscent of the movie ‘Minority Report’18, obviously raises ethical considerations as well.

Harnessing AI

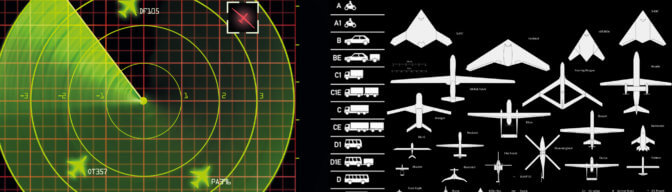

In the Air and Space domains, ML applications gained traction with the introduction of a new generation of manned and unmanned platforms. Exponentially increasing volumes of data, collected from multiple sensors, gave rise to the requirement for solutions that infer meaningful output from raw and complex data. The academic literature has already concluded that DL applications outperform classical statistical techniques in several areas of air platforms (e.g., Maintenance, Repair and Overhaul [MRO]).19 The United States Department of Defense publication of 2018, ‘Harnessing AI to Advance Our Security and Prosperity’, argues that evolving technology has the potential to alter the structure of the battlefield in the near future and will introduce new challenges and threats. Emphasizing the potential of AI technology, the study supports the widely accepted idea that successful results require multidisciplinary and inter-organizational collaboration among the public sector, the private sector and academia.20 It is inevitable that DL applications will be employed to harness data from the high-volume raw data pool generated by new generation platforms, initially friendly ones. The inherent ability of these algorithms to classify and process untagged data, and the high efficiency of natural language and image recognition applications, draw attention to potential defence-focused uses, starting with the intelligence domain and extending beyond it. Limited studies of DL uses in military intelligence indicate the already increasing efficiency of proposed applications.21 DL enables innovative and inspiring solutions such as audio-based drone detection, which leverages high data processing power instead of visual recognition.22 The increasing rate at which documents acknowledge AI, ML, Big Data, Human on the Loop (HOTL), Human in the Loop (HITL), and Human out of the Loop (HOOTL) as new and/or emerging technologies gives a picture of future battlespace environments23.
HOTL, for example, could be argued to be a solution to the ethical liability concerns that arise in conflict. Under this approach, an AI system provides management options in battle that comply with the rules of engagement, while humans retain the possibility of vetoing options to ensure that ethical requirements are met24.
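The HOTL arrangement described here can be made concrete with a small sketch. The code below is purely illustrative and is not drawn from any fielded system: the `Option` type, its `score` and `roe_compliant` fields, and the `propose` and `decide` functions are all hypothetical names introduced for this example. It assumes the machine filters candidate options for rules-of-engagement compliance and ranks them, while a human supervisor may veto any option before it is selected.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass(frozen=True)
class Option:
    """A hypothetical course of action proposed by the AI system."""
    name: str
    score: float          # illustrative utility estimate, higher is better
    roe_compliant: bool   # outcome of an assumed rules-of-engagement check


def propose(options: Iterable[Option]) -> List[Option]:
    """Keep only ROE-compliant options, ordered from best to worst score."""
    compliant = (o for o in options if o.roe_compliant)
    return sorted(compliant, key=lambda o: o.score, reverse=True)


def decide(options: Iterable[Option],
           human_vetoes: Callable[[Option], bool]) -> Optional[Option]:
    """Return the best compliant option the human does not veto, else None."""
    for option in propose(options):
        if not human_vetoes(option):
            return option
    return None  # every option was vetoed, so no action is taken


# Usage: option A fails the ROE check, the supervisor vetoes B, so C is chosen.
candidates = [
    Option("A", 0.9, roe_compliant=False),
    Option("B", 0.8, roe_compliant=True),
    Option("C", 0.6, roe_compliant=True),
]
chosen = decide(candidates, human_vetoes=lambda o: o.name == "B")
print(chosen.name if chosen else "no action")  # prints "C"
```

In this sketch the human never has to approve each option actively; the machine proceeds unless a veto is issued, which is what distinguishes HOTL from an HITL design where explicit approval would be required.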

Conclusion

Big data and learning algorithms are being utilized by governments, military institutions and commercial organizations at an ever-increasing rate. Innovations observed in the implementation of decisions by AI range from autonomous vehicles to smart speakers, medical diagnosis to crime mapping, autonomous commercial Unmanned Aerial Systems to military fighter platforms. The drastic and expedited shift in decision authority ownership brings about ethical and judicial issues, and the requirement of swift orientation by decision makers at every level. In a not-so-distant future, not only will the sensing and computing capabilities of AI systems keep increasing at an exponential rate, but also systems may gain the capacity to mimic social and emotional capabilities of humans and make decisions indistinguishable from their human counterparts.

Playing an important role in establishing interoperability standards and norms of use in the military applications of artificial intelligence25, NATO is striving both to keep its technological advantage as an early adopter and to meet moral requirements that might not be the adversary’s primary concern. The vital question in this process is not the accuracy of the decisions made by machines, but the consequences of erroneous decisions on ethical and judicial grounds. Some decisions are as difficult to delegate to machines as they are complicated for humans to make. The Trolley Problem26 illustrates the dilemma of utilitarianism versus deontological ethics very well through the example of self-driving vehicles in an unavoidable crash scenario. Considering the consequences of critical decision problems in the military realm, which could be vital on the battlefield, the risks as well as the advantages of AI should be rigorously evaluated. These relatively new operational decision-making actors portend a dramatic change in battlefield dynamics, starting with the sharing of authority. Human actors will gradually become subordinate as the domains become more complex, involving numerous constraints and requiring tough and rapid decisions. State-of-the-art AI systems, which have now gone far beyond Deep Blue beating a chess grandmaster, are poised to become the new commanding entities of the battlefield, with the capability of evaluating high-volume data-derived outputs in milliseconds, meaning faster and, most of the time, more accurate and robust decisions. Every emerging platform will potentially further increase the requirement to delegate decision authority to machines.
Sustaining a competent Air and Space Power in the future is only possible by considering the influence of new decision makers on the battlefield, adapting the traditional C2 structure accordingly, and leveraging a synergy of collaboration between governments, commercial actors, and academia.