ISR: from Data to Comprehension

The info-operational environment in which we are immersed is characterized by conflicts that span the entire spectrum of the competition continuum,1 including all possible combinations of conventional, asymmetric and hybrid operations.

Our military organizations have faced the changing intelligence and C2 environments by following a specific guideline: enhancing the information and decision-making processes while progressively decentralizing them. This approach was based upon a powerful assumption: information enables understanding, and understanding enables decision-making.

The Italian Air Force (ITAF), like other Air & Space Components, follows this guideline, especially in the ISR field. As a pioneer in this evolution, it has discovered counter-intuitive evidence: the more the quality and quantity of information are improved and decentralized, the more evident it becomes that information doesn’t necessarily enable understanding, and understanding doesn’t necessarily enable decision advantage.

Hopping into the ‘Rabbit Hole’: a Theoretical Approach to ISR

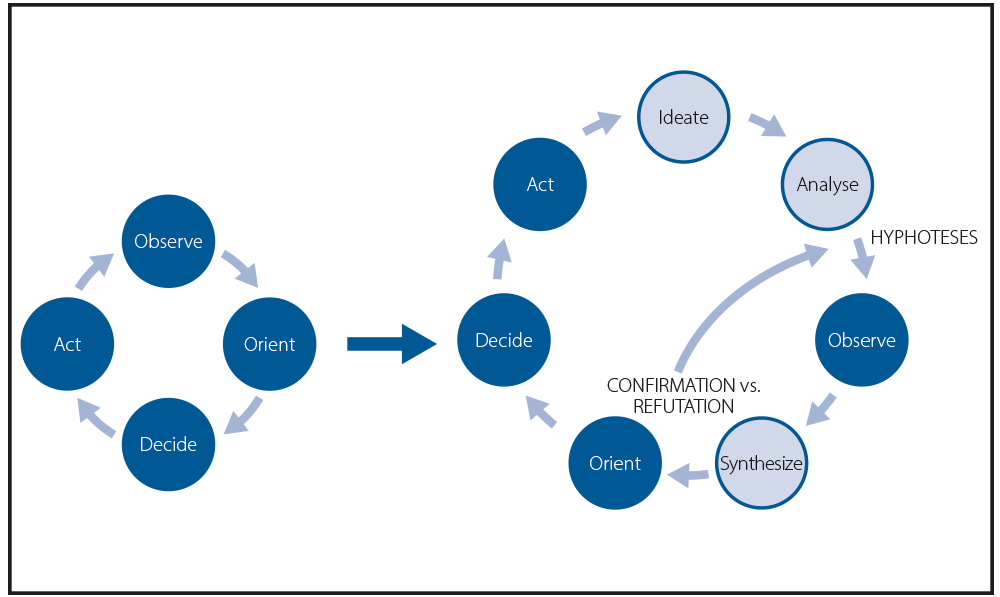

One of the most interesting passages of J. Boyd’s thinking is when he identifies the ‘Synthesis-Analysis’ or ‘Induction-Deduction’ interaction,2 which is the starting point of the understanding process we use ‘to develop and manipulate concepts to represent observed reality’.3 Since this process orients the subsequent Decisions and Actions, the idea brings us to the so-called ‘induction problem’, long debated before Boyd: how many observations are required to arrive at a synthesis capable of predicting how a scenario will develop? One, ten, a hundred, a thousand?

K. Popper stated that ‘the belief that we can start with pure observations alone […] is absurd’,4 because ‘Observation is always selective. […] It needs a chosen object, a definite task, a point of view, a problem’;5 thus, it is impossible to understand reality inductively. In Popper’s view, the creative, intuitive element is at the beginning of any attempt at understanding, so even if we are not directly aware of it, the OODA loop never really starts from an observation.

Proceeding with the thought process, we can hence identify a more realistic (i6)(a7)O(s8)ODA loop: there is always an ‘Ideate’ phase before an observation, even if implicit or hidden. In Popper’s words, ‘[…] it is the […] theory which leads to, and guides, our systematic observations […]. This is what I have called the “searchlight theory of science”, the view that science itself throws new light on things; that […] it not only profits from observations but leads to new ones.’9

Before an Observation, another process also intervenes where we start from an idea, a postulate, or a general theory and then we draw conclusions on the phenomenon that should logically derive from the initial idea. K. Popper identified this process and we may refer to it as the ‘deduct’ or ‘analyse’ phase, yet bearing in mind there is an essential distinction from the term used by J. Boyd. For K. Popper, deduction precedes observation, as ‘without waiting, passively, for repetitions to impress or impose regularities on us, we actively try to impose regularities upon the world. We try to […] interpret it in terms of laws invented by us’.10

Only then can the observation phase start fulfilling its core role, namely disproving our assumptions. ‘These may have to be discarded later, should observation show that they are wrong. This was a theory of trial and error, of conjectures and refutations’.11 The most important information is the information that falsifies the hypotheses, because it inspires the most correct decisions. The rest could be useless data at best, toxic details at worst. Finally, as anticipated at the beginning of the chapter, the ‘Synthesize’ phase comes into play to enable the Orient phase.
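The role of observation as refutation can be illustrated with a minimal sketch. It is not drawn from Popper, Boyd, or any ITAF procedure: the `Hypothesis` type, the `refute` function, the `sorties` field, and the two example hypotheses are all hypothetical names chosen for illustration. Under these assumptions, an observation counts as informative only when it eliminates at least one surviving hypothesis.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Observation = Dict[str, float]


@dataclass(frozen=True)
class Hypothesis:
    """A conjecture stated before observing (hypothetical illustration)."""
    name: str
    # Returns True when an observation is compatible with the conjecture.
    is_compatible: Callable[[Observation], bool]


def refute(
    hypotheses: List[Hypothesis], observations: List[Observation]
) -> Tuple[List[Hypothesis], List[Observation]]:
    """Discard hypotheses contradicted by observations.

    Returns the surviving hypotheses and the observations that
    refuted at least one hypothesis still standing at that point.
    """
    surviving = list(hypotheses)
    informative: List[Observation] = []
    for obs in observations:
        still_standing = [h for h in surviving if h.is_compatible(obs)]
        if len(still_standing) < len(surviving):
            # The observation falsified something: it carries information.
            informative.append(obs)
        surviving = still_standing
    return surviving, informative


# Assumed example: two conjectures about a made-up daily sortie count.
routine = Hypothesis("routine activity", lambda o: o["sorties"] <= 10)
surge = Hypothesis("activity surge possible", lambda o: o["sorties"] >= 3)

observations = [{"sorties": 4}, {"sorties": 6}, {"sorties": 14}]
surviving, informative = refute([routine, surge], observations)

print([h.name for h in surviving])
print(informative)
```

In this toy run the first two observations confirm both conjectures and change nothing, while the third one refutes the ‘routine activity’ conjecture and is the only one retained as informative.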

So, why is this (i)(a)O(s)ODA loop so difficult?

Process of Understanding: Human Cognitive Bias Barriers

The last few decades of progress in cognitive psychology have allowed us to identify the main biases hindering our process of understanding. These range from the famous ‘confirmation bias’ and the ‘WYSIATI12 bias’ to the inability to correctly frame statistical problems (e.g. regression to the mean13 and the law of small numbers14), and on to heuristics and other biases (e.g. substitution,15 cause and chance,16 affect17).

Furthermore, as humans, we cannot reliably convert information into understanding because:

- we tend to underestimate the chance and irrationality of occurrences;

- we often fall into the ‘narrative fallacy’ trap;18

- we are at the mercy of the ‘ludic fallacy’, which consists of treating real-world risks and opportunities as if they derived from chances similar to those of gambling.19

Finally, among other powerful human biases, we should not forget Taleb’s ‘round-trip fallacy’,20 meaning the systematic logical confusion between statements made in similar terms but with totally different meanings.21

The analysis of cognitive biases helped us identify why the starting assumption22 is now at stake. Before the digital revolution and the rise of high-density info-ops environments, human cognitive biases were thwarted by military-specific organizational workarounds: a centralized and pyramidal model with an interaction at the top between a Commander and their Headquarters. This model successfully stood the test of time. It was the filter of the different hierarchy levels and the dialectic between the two figures that mitigated, most of the time, the cognitive biases leading to potentially flawed decisions.

As we previously said, the advent of the digital revolution led us to think that the resulting huge information density could be managed by decentralizing and accelerating the decision-making process at ‘the speed of relevance’.23 Nonetheless, this is a partial solution that brings an even greater issue to the table: in doing so, we lose an effective dam against cognitive biases.

Obstacles to Understanding: AI24 is Not the Silver Bullet

Given the framework described in the previous paragraph, great expectations25 are placed upon the use of Artificial Intelligence (AI) in the ISR and decision-making processes. We must be aware of the risk that, while trying to avoid human biases, we could fall boldly into the biases typical of AI. These AI biases, which have the potential to be even more dangerous and subtle than human biases, fall into two distinct areas:

- AI predictions26,27 always represent the processing of pre-existing data and thus are blind to novelty and exceptions (again, the induction problem). They will always be a future version of the past. This means that even the best AI algorithm, if not properly handled, could be of little use when we need it the most (e.g. to prevent a surprise on the battlefield).

- Human biases can be ‘exported’ in their entirety (i.e. through coding) into the AI tool that we are counting on to rescue us from those very same biases. This risk, theoretically identified by K. Popper almost a century ago,28 has materialized today with particularly harsh social consequences.
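The first point in the list above can be made concrete with a deliberately naive sketch. It assumes a toy ‘model’ that only counts how often each event type appeared in past data; the event labels and function names are hypothetical, and real AI systems are far more sophisticated. The sketch only illustrates the structural limit: what never appeared in the history receives no weight in the prediction.

```python
from collections import Counter
from typing import Dict, List


def fit_frequency_model(history: List[str]) -> Dict[str, float]:
    """Estimate event probabilities purely from past occurrences."""
    counts = Counter(history)
    total = sum(counts.values())
    return {event: n / total for event, n in counts.items()}


def predicted_probability(model: Dict[str, float], event: str) -> float:
    """Probability the model assigns to an event (0.0 if never seen)."""
    return model.get(event, 0.0)


# Assumed, made-up history of observed activity types.
history = ["patrol", "patrol", "resupply", "patrol"]
model = fit_frequency_model(history)

print(predicted_probability(model, "patrol"))        # seen in the past
print(predicted_probability(model, "novel_tactic"))  # never seen before
```

The second point follows the same logic: if the history fed to `fit_frequency_model` reflects a biased selection of events, the resulting probabilities reproduce that bias without any signal that it is present.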

ISR: A New Paradigm to Orient Decisions and Actions

For information to lead to a decision advantage, airborne ISR must be enlightened by new awareness: current organizational and training models have noticeable limits.

The conceptual guideline behind our intelligence and C2 process is based upon the necessity to accelerate and decentralize decision-making: static Command and Control chains are outdated tools and need to be replaced by web chains capable of adapting rapidly and autonomously based on a single priority: mission understanding and operational environment comprehension. To realize such a change, it is necessary (both conceptually and technically) to transform the quantity of information into quality of understanding. This is easier said than done, since ‘technological capabilities depend on complementary institutions, skills and doctrines’;29 thus, new skills must be developed so that the contribution of AI reduces, rather than increases, the potential effects of toxic information. Furthermore, militaries must be informed about and trained in cognitive psychology so that they can effectively diagnose human intelligence biases and leverage AI to compensate for our weaknesses.

The human element could then fully exploit Big Data and AI, properly assisted by ‘graceful degradation’ systems,30 becoming the main character in designing new theories, hypotheses, and scenarios to orient Analysis and Observation, detecting what is ‘normal’ (confirmation) and what is potentially an ‘anomaly’ (refutation).

These ‘anomalies’ will be our ‘superior pieces of information’, allowing us to predict events evolving along completely new and previously unknown scenarios (i.e., scenarios for which Machine Learning is irrelevant). To identify them, we must train and utilize the human mind for its most peculiar and irreplaceable expertise: emotional intelligence, creativity, empathy, and the ability to consider elements of irrationality, randomness, and madness.31 These characteristics are ultimately aimed at ‘creating’ and ‘identifying’ exceptions, and hence predictions.

To conclude, to find ‘superior pieces of info’ starting from terabytes of raw and unprocessed data, it is necessary to exit from the legacy dichotomy between human and artificial intelligence. We must bring the human back to the centre toward forms of ‘humanly enhanced Artificial Intelligence’, or the so-called human-machine teaming.32 Machine augmentation will ultimately forge a more cognizant human being.33