“In three years, Cyberdyne will become the largest supplier of military computer systems. All stealth bombers are upgraded with Cyberdyne computers, becoming fully unmanned. Afterwards, they fly with a perfect operational record. The Skynet Funding Bill is passed. The system goes online on August 4th, 1997. Human decisions are removed from strategic defense. Skynet begins to learn at a geometric rate. It becomes self-aware at 2:14 AM, Eastern time, August 29th. In a panic, they try to pull the plug.”

Quote taken from the movie ‘Terminator 2: Judgment Day’

Introduction

To overcome the current limitations of Unmanned Aircraft Systems (UAS), more and more automatic functions have been, and will continue to be, implemented in current and future UAS. In the civil arena, highly automated robotic systems are already common, e.g. in the manufacturing sector. But what is widely accepted in the civilian community may face significant resistance when applied to military weapon systems. A manufacturing robot can be called ‘autonomous’ without causing public alarm. By contrast, the public’s image of an autonomous unmanned aircraft is that of a self-thinking killing machine, as depicted by James Cameron in his Terminator films. This raises the question of what an autonomous system actually is and what distinguishes it from an automatic system.

Defining Autonomous

In philosophical terms, autonomy is defined as the possession of, or the right to, self-government, self-rule or self-determination. Synonyms include independence and sovereignty.1 The word derives from Greek and literally means ‘having its own law’. Immanuel Kant, an 18th-century German philosopher, defined autonomy as the capacity to deliberate and to decide based on a self-given moral law.2

In technical terms, autonomy is defined quite differently. The U.S. National Institute of Standards and Technology (NIST) defines a fully autonomous system as one capable of accomplishing its assigned mission, within a defined scope, without human intervention while adapting to operational and environmental conditions. It further defines a semi-autonomous system as one capable of performing autonomous operations with various levels of human interaction.3

Most people understand the term ‘autonomous’ only in its philosophical sense. A simple car navigation system illustrates the contradiction between public perception and technical definition. After the driver enters a destination address, which is the only human interaction, the system determines the best route according to the given parameters, e.g. the shortest route or the one with the lowest fuel consumption. It will alter the route without human interaction if an obstacle (e.g. a traffic jam) makes this necessary or if the driver takes a wrong turn. The car navigation system is therefore technically autonomous, yet no one would call it that, because of the commonly perceived philosophical meaning of the term.
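To make the navigation example concrete, the sketch below shows how such a route planner might work. It is a minimal illustration under stated assumptions, not how any particular product is implemented: the road network `ROADS`, the `METRICS` table and the `best_route` function are hypothetical, and Dijkstra's shortest-path algorithm is chosen here simply as a standard technique for this kind of problem.

```python
import heapq

# Hypothetical road network (illustration only): each edge maps to
# (distance_km, fuel_units). Integer weights keep the arithmetic exact.
ROADS = {
    "A": {"B": (5, 6), "C": (2, 4)},
    "B": {"D": (4, 5)},
    "C": {"B": (1, 2), "D": (7, 6)},
    "D": {},
}

# Hypothetical: index of each optimisation criterion in the edge weight tuple.
METRICS = {"distance": 0, "fuel": 1}


def best_route(roads, start, goal, metric="distance", blocked=frozenset()):
    """Return (cost, path) of the cheapest route using Dijkstra's algorithm.

    `blocked` holds (from, to) edges to avoid, e.g. a reported traffic jam.
    Returns None if the goal cannot be reached.
    """
    index = METRICS[metric]
    queue = [(0, start, [start])]  # (cost so far, node, path taken)
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for nxt, weights in roads[node].items():
            if (node, nxt) in blocked or nxt in settled:
                continue
            heapq.heappush(queue, (cost + weights[index], nxt, path + [nxt]))
    return None


# The only "human interaction": choosing the destination and the criterion.
print(best_route(ROADS, "A", "D"))                         # (7, ['A', 'C', 'B', 'D'])
print(best_route(ROADS, "A", "D", metric="fuel"))          # (10, ['A', 'C', 'D'])
# Rerouting around a traffic jam on the B-D road, without asking the driver.
print(best_route(ROADS, "A", "D", blocked={("B", "D")}))  # (9, ['A', 'C', 'D'])
# The driver took a wrong turn and is now at B: simply recompute from there.
print(best_route(ROADS, "B", "D"))                         # (4, ['B', 'D'])
```

Every apparent "decision" here, including the reroute, follows mechanically from the map data and a fixed cost rule; the system never chooses its own goal or rules.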

Public Acceptance

Because of this common understanding of the term ‘autonomous’, the public’s willingness to accept highly complex autonomous weapon systems will most likely be very low. Furthermore, the decision to give some unmanned military aircraft aggressive names, such as ‘Predator’ or ‘Reaper’, further undermines the prospect of acceptance.

Since the public’s understanding of the classic meaning of ‘autonomous’ is unlikely to change, we must instead change the technical terminology used to describe so-called ‘autonomous’ systems. Even if a system appears to behave autonomously, it is only an automated system, because it is strictly bound to its given set of rules, however broad those rules and however complex the system may be.

Thinking Machines?

Calling a system autonomous in the way Immanuel Kant defines autonomy would imply that the system is responsible for its own decisions and actions. This idea may seem absurd at first glance, but the premise raises some important questions for future UAS development that should be considered very carefully. How should a highly automated system react if it is attacked? Should it use only defensive measures, or should it engage the attacker with lethal force? Who is legally responsible for combat actions performed automatically, without human interaction?

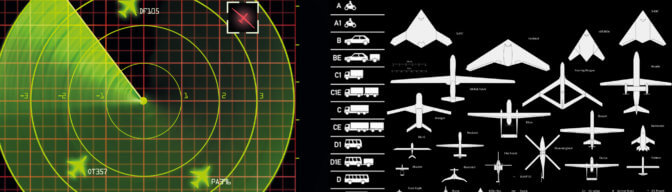

The international Law of Armed Conflict contains no provisions specifically addressing autonomous or automated weapon systems. Fortunately, no change is needed as long as unmanned systems adhere to the same rules that apply to manned assets. This implies that there is always a human in the loop to make the final legal assessment and decide whether and how to engage a target. Although software may identify targets by matching them against patterns that can be digitised, it cannot cope with the legal aspects of armed combat, which require not only a deeper understanding of the Laws of Armed Conflict but also consideration of ethical and moral factors.

Conclusion

Current technology is far from being able to build autonomous systems in the literal sense of the word, and it is doubtful that this level of development will be reached in the near term. Creating a separate technical definition alongside the existing, classic and commonly used one will only cause confusion.

Therefore, the technical term ‘autonomous’ as currently used should be replaced by the term ‘automated’, to avoid misunderstandings and to ensure a common set of terms as a basis for mutual understanding in the future. ‘Automated’ could be subdivided into several levels of automation, with ‘fully automated’ as the top level. This level would apply to the highly complex systems that are incorrectly called ‘autonomous’ today.

But even fully automated systems must remain under human oversight and require human authorisation to engage targets with live ammunition. For ethical and legal reasons, decision-making and responsibility must not be shifted from man to machine, unless we want to risk a ‘Terminator’-like scenario.